PhD Student at Carnegie Mellon University, The Robotics Institute

Hi! I’m a Ph.D. student at The Robotics Institute, Carnegie Mellon University, where I’m fortunate to be advised by Prof. Oliver Kroemer.

My research focuses on building intelligent robotic systems that can learn complex skills and generalize them to new environments with little or no supervision.

I develop algorithms for robot learning, with an emphasis on skill acquisition and transfer, action-effect prediction, affordance understanding, and learning from demonstration.

I'm particularly interested in combining robot manipulation with deep learning, perception, foundation models such as LLMs and VLMs, symbolic reasoning, and data-efficient optimization techniques to enable robots to adapt quickly and robustly to real-world scenarios.

Ultimately, my goal is to bridge the gap between low-level control and high-level reasoning, empowering robots to understand, plan, and act as versatilely and intuitively as humans do.

Education

- Carnegie Mellon University, The Robotics Institute, School of Computer Science. Ph.D. Student, Sep. 2022 - present

- Bogazici University, Istanbul, Turkiye. MSc and BSc in Computer Science

Academic Experience

- Research Assistant, CMU, Intelligent Autonomous Manipulation Lab (IAM LAB), 2022 - present

- Teaching Assistant, Carnegie Mellon University (2024 - 2025) and Bogazici University (2019 - 2020)

- Research Assistant Intern, University of Tokyo, Cognitive Developmental Robotics Lab (Nagai LAB), 2020

- Research Assistant, Bogazici University, Cognition, Learning and Robotics Lab (Colors LAB), 2017 - 2022

Work Experience

- Spiky AI, Lead ML Research Engineer, 2020 - 2022

Projects

ARM/MFI - Grounded Task-Axis Skills for Generalizable Robot Manipulation

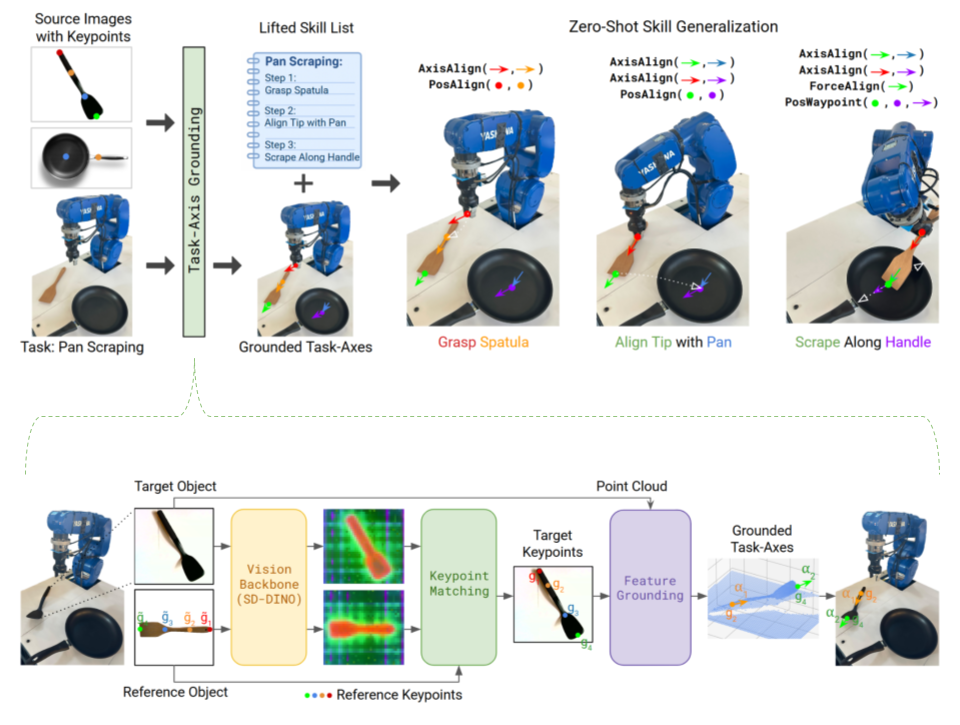

This project introduces Grounded Task-Axes (GTA), a framework for zero-shot robotic skill transfer that modularizes robot actions into interpretable, reusable low-level controllers. Each controller is grounded using object-centric keypoints and axes, allowing robots to align and execute skills across novel tools and scenes without any training.

- Modular Controller Design: Skills like screwing, wiping, and inserting are composed from prioritized task-axis controllers (e.g., PosAlign, AxisAlign, ForceSlide) that operate within each other's nullspaces.

- Visual Foundation Models: To generalize across objects, we use foundation models (e.g., DINOv2, SAM) to find semantic and geometric correspondences between keypoints on example and current objects.

- Skill Composition for Manufacturing: In the ARM/MFI project, we apply these skills to the NIST assembly box task. Lifted skills are defined over abstract axes and then grounded for unseen CAD models or image inputs, which makes the system scalable to new tasks and tools.

This project bridges traditional control theory with modern visual reasoning, offering interpretable, adaptable, and sample-efficient (zero-shot) skill transfer for real-world manufacturing and manipulation tasks.
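As a rough illustration of what "operating within each other's nullspaces" means, the sketch below combines Cartesian velocity commands from prioritized controllers. It is not the GTA implementation: it assumes each controller claims a single 3D axis, and the names `project_out` and `compose` are hypothetical.

```python
import math

def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def project_out(v, axis):
    """Remove from v its component along the unit vector `axis`.

    Equivalent to multiplying v by the nullspace projector (I - a a^T).
    """
    s = dot(v, axis)
    return [x - s * a for x, a in zip(v, axis)]

def compose(controllers):
    """Combine (axis, command) pairs listed from highest to lowest priority.

    Each command is projected into the nullspace of all higher-priority
    axes before it is added, so it cannot disturb those axes.
    """
    total = [0.0, 0.0, 0.0]
    claimed = []  # orthonormal axes owned by higher-priority controllers
    for axis, command in controllers:
        for c in claimed:
            command = project_out(command, c)
        total = [t + x for t, x in zip(total, command)]
        # Gram-Schmidt step: keep the claimed axes orthonormal.
        for c in claimed:
            axis = project_out(axis, c)
        norm = math.sqrt(dot(axis, axis))
        if norm > 1e-9:
            claimed.append([x / norm for x in axis])
    return total

# Assumed toy setup: pressing down along z has priority over sliding along x.
press = ([0.0, 0.0, 1.0], [0.0, 0.0, -0.1])
slide = ([1.0, 0.0, 0.0], [0.2, 0.0, 0.05])  # stray z component gets removed
velocity = compose([press, slide])
```

Here the stray z component of the sliding command is projected away, so the pressing controller alone determines motion along z.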

Jan. 2024 - Ongoing

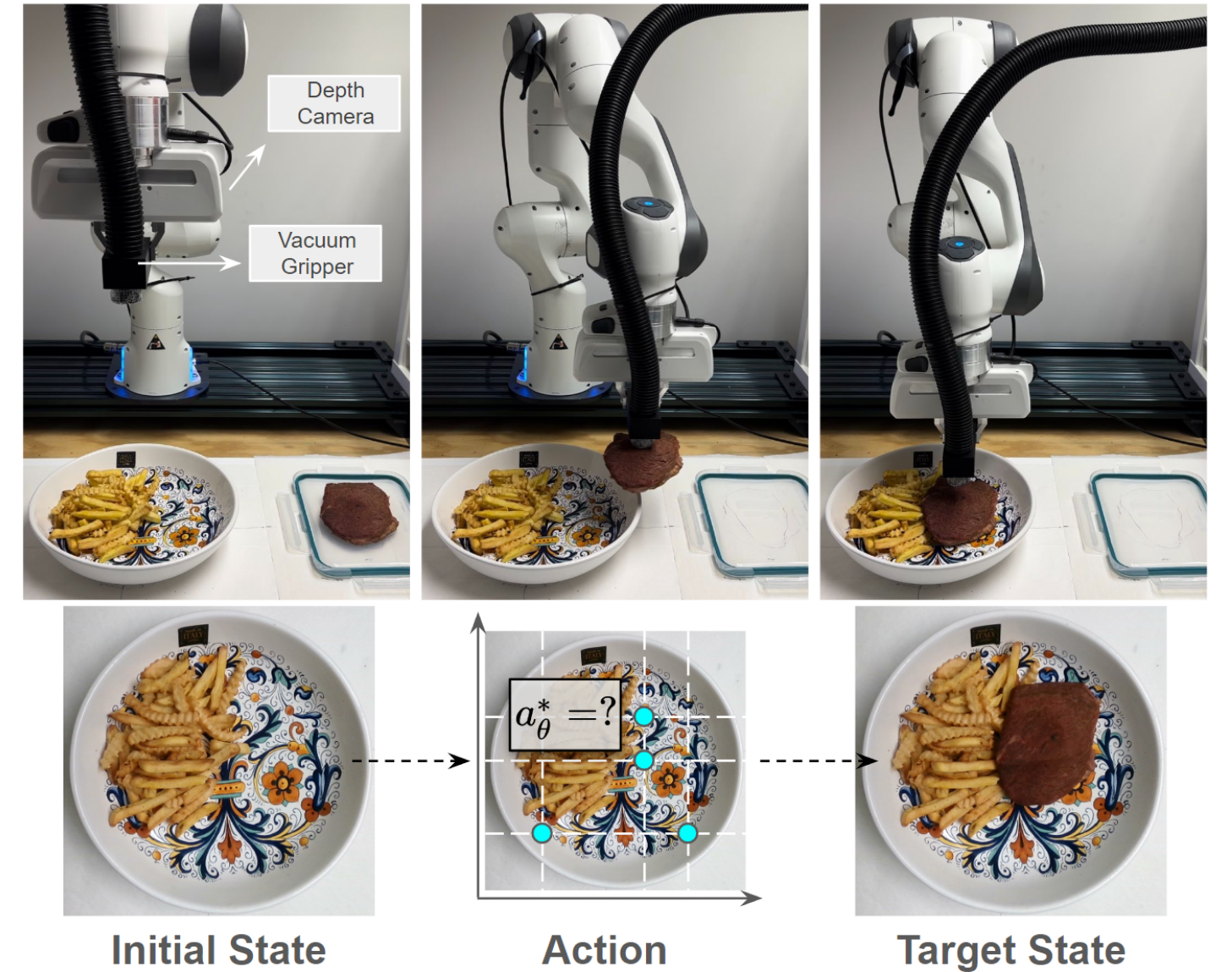

Sony AI - Precise Food ManipulationThis project tackled the challenge of precise food manipulation and plating, requiring robots to interact with deformable and rigid foods with high accuracy. It had two main components:

Sony AI - Precise Food ManipulationThis project tackled the challenge of precise food manipulation and plating, requiring robots to interact with deformable and rigid foods with high accuracy. It had two main components:

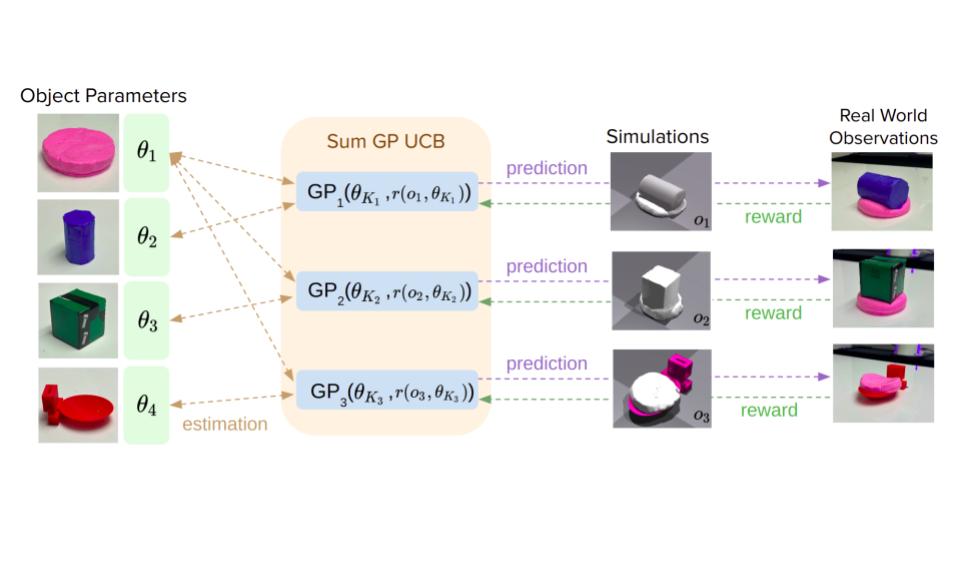

- Material Property Estimation: We proposed a Bayesian optimization framework (Sum-GP-UCB) to estimate material properties (e.g., mass, stiffness, Poisson's ratio) from real-world interaction scenes.

- Multi-Model Planning for Plating: We built a system that switches among heuristic, learned, and simulation-based predictive models to optimize placement actions using Model Deviation Estimators (MDEs).

Aug. 2022 - Jan. 2024

Selected Publications

Grounded Task Axes: Zero-Shot Semantic Skill Generalization via Task-Axis Controllers and Visual Foundation Models

M. Yunus Seker, Shobhit Aggarwal, Oliver Kroemer

Humanoids 2025 - Accepted

Grounded Task Axes (GTA) is a zero-shot skill transfer framework that enables robots to generalize manipulation tasks to unseen objects by grounding modular controllers (such as position, force, and orientation controllers) using vision foundation models. By matching semantic keypoints between objects, robots can perform complex, multi-step tasks such as scraping, pouring, or inserting without training or fine-tuning.
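Keypoint matching of this kind can be pictured as a nearest-neighbor search in descriptor space. The sketch below is only an illustration under assumptions: the descriptors are made-up 3D vectors standing in for features from a vision foundation model, and `match_keypoints` is a hypothetical helper rather than part of GTA.

```python
import math

def cosine(a, b):
    """Cosine similarity between two descriptor vectors."""
    num = sum(x * y for x, y in zip(a, b))
    den = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return num / den

def match_keypoints(example_feats, scene_feats):
    """For each named example keypoint, return the index of the scene
    descriptor with the highest cosine similarity."""
    matches = {}
    for name, feat in example_feats.items():
        scores = [cosine(feat, s) for s in scene_feats]
        matches[name] = max(range(len(scores)), key=scores.__getitem__)
    return matches

# Toy descriptors (assumed values, not real model outputs).
example = {"tip": [1.0, 0.1, 0.0], "handle": [0.0, 0.2, 1.0]}
scene = [[0.1, 0.0, 0.9], [0.5, 0.5, 0.5], [0.9, 0.2, 0.1]]
matches = match_keypoints(example, scene)
```

The matched scene locations would then serve as the keypoints that ground each controller's axes on the new object.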

Leveraging Simulation-Based Model Preconditions for Fast Action Parameter Optimization with Multiple Models

M. Yunus Seker, Oliver Kroemer

[Paper] [ArXiv] [Video] [Presentation]

IROS 2024 - Accepted

This paper presents a framework that optimizes robotic actions by choosing among multiple predictive models (analytical, learned, and simulation-based) depending on context. Using Model Deviation Estimators (MDEs), the robot selects the most reliable model to predict outcomes quickly and accurately. Sim-to-sim MDEs enable faster optimization and smooth transfer to real-world tasks through fine-tuning.
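One way to picture deviation-based model selection is the sketch below. The selection rule (cheapest model whose estimated deviation is within a tolerance, otherwise the one with the lowest estimated deviation) is an assumption made for illustration, not the rule from the paper, and the `Model` fields and toy functions are hypothetical.

```python
from dataclasses import dataclass
from typing import Callable, List

@dataclass
class Model:
    name: str
    predict: Callable[[float], float]    # action parameter -> predicted outcome
    deviation: Callable[[float], float]  # stand-in MDE: action -> estimated error
    cost: float                          # relative cost of one prediction

def select_model(models: List[Model], action: float, tolerance: float) -> Model:
    """Pick the cheapest model whose estimated deviation is acceptable.

    Falls back to the model with the lowest estimated deviation when no
    model is within tolerance.
    """
    trusted = [m for m in models if m.deviation(action) <= tolerance]
    if trusted:
        return min(trusted, key=lambda m: m.cost)
    return min(models, key=lambda m: m.deviation(action))

# Toy models: the analytical one is cheap but only reliable for small actions.
models = [
    Model("analytical", lambda a: 2.0 * a, lambda a: abs(a), 1.0),
    Model("learned", lambda a: 2.0 * a + 0.1, lambda a: 0.3, 5.0),
    Model("simulation", lambda a: 2.0 * a + 0.02, lambda a: 0.05, 100.0),
]
small = select_model(models, action=0.1, tolerance=0.2)
large = select_model(models, action=1.0, tolerance=0.2)
```

With these toy numbers, small actions are handled by the cheap analytical model, while larger ones fall through to the expensive simulation.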

Estimating material properties of interacting objects using Sum-GP-UCB

M. Yunus Seker, Oliver Kroemer

[Paper] [ArXiv] [Video] [Presentation]

ICRA 2024 - Accepted

This paper introduces a Bayesian optimization framework to estimate object material properties from observed interactions. By modeling each observation independently and focusing only on relevant object parameters, the method achieves faster, more generalizable optimization. It further improves efficiency through partial reward evaluations, enabling robust and incremental learning across diverse real-world scenes.
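A minimal sketch of the idea, under stated assumptions: each observed scene gets its own Gaussian process over only the parameters relevant to it, and the acquisition score is an upper confidence bound on the sum of those independent GPs. The kernel, the value of `beta`, the toy reward, and all function names are assumptions for illustration and may differ from the paper's formulation.

```python
import numpy as np

def rbf(a, b, length=0.3):
    """Squared-exponential kernel between point sets a (n, d) and b (m, d)."""
    d2 = ((a[:, None, :] - b[None, :, :]) ** 2).sum(-1)
    return np.exp(-0.5 * d2 / length**2)

def gp_posterior(x_train, y_train, x_query, noise=1e-4):
    """Zero-mean GP posterior mean and variance at x_query."""
    k = rbf(x_train, x_train) + noise * np.eye(len(x_train))
    k_s = rbf(x_train, x_query)
    mean = k_s.T @ np.linalg.solve(k, y_train)
    var = 1.0 - np.einsum("ij,ij->j", k_s, np.linalg.solve(k, k_s))
    return mean, np.maximum(var, 0.0)

def sum_ucb(scenes, candidates, beta=2.0):
    """UCB of a sum of independent per-scene GPs.

    Each scene is (param_indices, x_train, y_train); its GP only sees the
    columns of the full parameter vector listed in param_indices.
    """
    mean_total = np.zeros(len(candidates))
    var_total = np.zeros(len(candidates))
    for idx, x_train, y_train in scenes:
        mean, var = gp_posterior(x_train, y_train, candidates[:, idx])
        mean_total += mean
        var_total += var  # variances add because the GPs are independent
    return mean_total + beta * np.sqrt(var_total)

# Toy problem: two normalized material parameters with assumed true values.
true = np.array([0.3, 0.7])

def reward(theta, idx):
    return -np.sum((theta[:, idx] - true[idx]) ** 2, axis=1)

tried = np.array([[0.1, 0.1], [0.5, 0.5], [0.9, 0.9], [0.2, 0.8]])
scenes = [
    ([0], tried[:, [0]], reward(tried, [0])),  # scene involving parameter 0 only
    ([0, 1], tried, reward(tried, [0, 1])),    # scene involving both parameters
]
axis = np.linspace(0.0, 1.0, 11)
grid = np.stack(np.meshgrid(axis, axis), -1).reshape(-1, 2)
scores = sum_ucb(scenes, grid)
next_theta = grid[np.argmax(scores)]  # parameters to evaluate next
```

Because each GP depends only on its own scene's parameters, a new scene can be added by appending one more entry to `scenes` without refitting the others.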

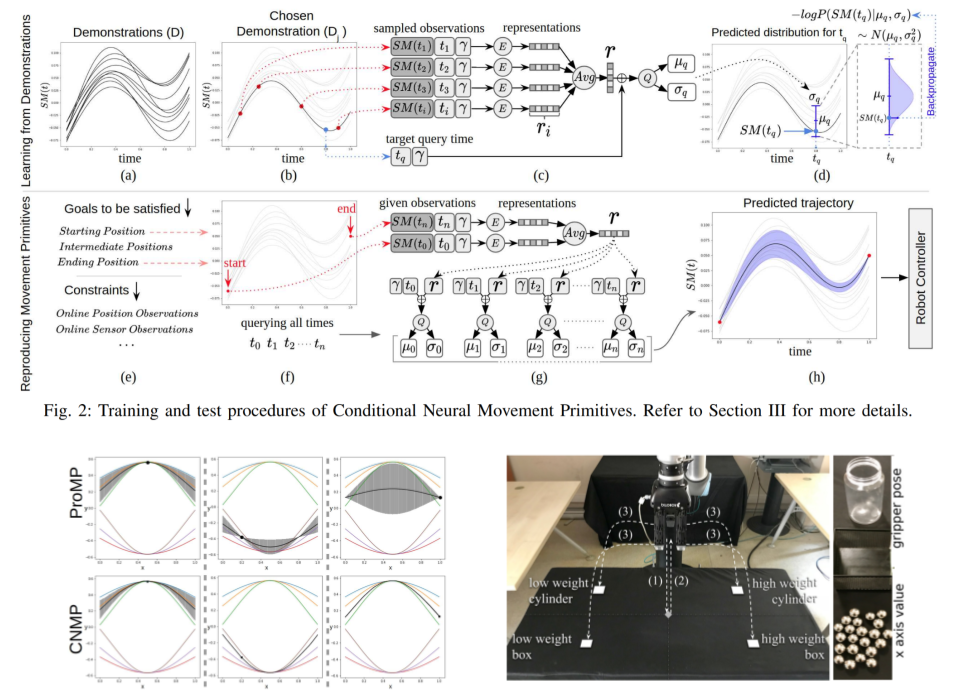

Conditional Neural Movement Primitives

M. Yunus Seker, Mert Imre, Justus Piater, Emre Ugur

RSS 2019 - Accepted

Conditional Neural Movement Primitives (CNMPs) are a learning-from-demonstration framework that enables robots to generate and adapt complex movement trajectories based on external goals and sensor feedback. Built on Conditional Neural Processes (CNPs), CNMPs learn temporal sensorimotor patterns from demonstrations and produce joint or task-space motions conditioned on goals and real-time sensory input. Experiments show CNMPs can generalize from few or many demonstrations, adapt to factors like object weight or shape, and react to unexpected changes during execution.
